Urban Planning will allow a person walking past a construction site to point their smartphone in a given direction and see an image of what the future building or construction will look like. With this information in hand, the person can then be invited to take part in polls or opinion surveys about aspects of the work being done in the area.

Going one step further, this augmented reality mobile system can allow a person walking past an area that will soon undergo work or be replanned to vote for one of the options the local administration is studying. These votes can help decision makers choose among the possible options and satisfy the citizens who gave their feedback.

This initiative would be similar to those available in some places that let citizens vote on future urban plans through web pages, but it takes a step towards mobility and augmented reality. A person walking down the street can vote for the plan they prefer or indicate which elements they consider necessary. They could also receive feedback from local authorities on the status of their suggestion, on how public opinion is being taken into account, on vote percentages, on lists of suggested urban furniture or playgrounds, and so on.
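As a rough illustration of the vote percentages mentioned above, the following minimal Python sketch tallies votes per planning option. The function name `vote_percentages` and the option labels are hypothetical; the source does not describe how votes are stored or counted.

```python
from collections import Counter


def vote_percentages(votes):
    """Return {option: share of all votes in percent}, rounded to one decimal.

    `votes` is an iterable of option labels, one entry per citizen vote.
    (Hypothetical data model, assumed only for this sketch.)
    """
    counts = Counter(votes)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {option: round(100.0 * n / total, 1) for option, n in counts.items()}


if __name__ == "__main__":
    # Example: four citizens voting on three hypothetical plans.
    print(vote_percentages(["plan A", "plan A", "plan B", "plan C"]))
```

A real deployment would also need to prevent duplicate votes per citizen, which is outside the scope of this sketch.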

On the one hand, this new system lets people participate actively in their local planning; on the other, it makes citizens part of what is happening around them because they can easily access the plans. In addition, a future inclusion of this application in the BiscayTIK system would make it easy to define a workflow for the procedure.

For citizens, Urban Planning is a benefit because it lets them participate and interact easily and effectively, while their feedback is taken into account by the local authorities on urban planning issues.

From the municipalities' point of view, it is a direct channel for receiving residents' opinions and for developing plans that already have public support.

George Liaros blog

Participation in Space App Camp, the Netherlands 2014

MobilitySense - Participation in "Apps for Thessaloniki"

MobilitySense is a mobile app that exploits the capabilities of modern smartphones to, on the one hand, offer citizens personalized information about smart ways to commute during rush hour and, on the other hand, turn each citizen into a living sensor of the city's traffic status.

More specifically, the MobilitySense application offers the following functionalities:

- Uses a map-based interface to inform citizens in real-time about the tram lines that deviate from the scheduled time-plan.

- Offers a personalized view of the city’s traffic situation that includes only the tram lines that are estimated as relevant to the citizen based on his current position.

- Offers the possibility to receive notifications generated from the city’s official regional transport office, about important problems in the city’s traffic network (e.g. Tram line 4 out of service from 15:00 – 17:00 due to maintenance).

- Lets citizens share written messages (including a photo and GPS coordinates) about unexpected incidents that are likely to affect the city's traffic network (e.g. Tram Line 8 delayed due to a car accident), so that fellow citizens in the area are informed in time.

- Offers a mechanism for recording citizens' daily commuting routes and for (optionally) submitting these routes to the city's regional transport office in order to contribute to optimizing the city's traffic network.

- Incorporates Augmented Reality to visualize nearby bus stops and present timetable information about the bus lines serving each stop.
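The personalized view described in the list above (only lines relevant to the citizen's position, and only those deviating from schedule) can be sketched as follows. This is a minimal illustration, not the app's actual logic: the `StopStatus` record, the 500 m radius, and the 120-second delay threshold are all assumptions introduced here.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two WGS84 points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass
class StopStatus:
    """Hypothetical record: one line observed at one stop."""
    line: str
    lat: float
    lon: float
    delay_s: int  # observed minus scheduled arrival, in seconds


def relevant_delays(statuses, user_lat, user_lon, radius_m=500, min_delay_s=120):
    """Return sorted line names that are near the user and off schedule."""
    lines = set()
    for s in statuses:
        near = haversine_m(user_lat, user_lon, s.lat, s.lon) <= radius_m
        if near and abs(s.delay_s) >= min_delay_s:
            lines.add(s.line)
    return sorted(lines)
```

The radius and threshold are exposed as parameters because a sensible value for "relevant" would depend on the city and the user, which the source does not specify.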

MobilitySense has been developed in the context of the EU-funded project Live+Gov (http://liveandgov.eu) and is currently adapted for the city of Helsinki, Finland. However, it could easily be adapted for the city of Thessaloniki using the data currently collected and maintained by OASTH.

Improve My City iOS app

Improve My City enables citizens to report local problems such as potholes, illegal trash dumping, faulty street lights, broken tiles on sidewalks, and illegal advertising boards. The submitted issues are displayed on the city's map. Users may add photos and comments. Moreover, they can suggest solutions for improving the environment of their neighbourhood.

Through the application, local government agencies enable citizens and local actors to take action to improve their neighbourhood. Reported cases go directly into the city's work order queue for resolution, and users are informed how quickly the case will be closed. When cases are resolved, the date and time of the resolution are listed, giving users the sense that the city is on the job.
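The report lifecycle described above (a case is reported, queued, then resolved with a recorded date and time) could be modelled roughly as below. The `Issue` class, its field names, and the two status values are hypothetical and are not taken from the Improve My City code base.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Issue:
    """Hypothetical model of one citizen report."""
    category: str               # e.g. "pothole", "faulty street light"
    lat: float
    lon: float
    reported_at: datetime
    status: str = "open"        # assumed states: "open" -> "resolved"
    resolved_at: Optional[datetime] = None

    def resolve(self, when: datetime) -> None:
        """Mark the issue resolved and record when it happened."""
        if self.status == "resolved":
            raise ValueError("issue already resolved")
        self.status = "resolved"
        self.resolved_at = when

    def time_to_resolve(self):
        """Return the resolution time as a timedelta, or None if still open."""
        if self.resolved_at is None:
            return None
        return self.resolved_at - self.reported_at
```

Keeping both timestamps on the record is what makes it possible to show users how long a case took to close.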

The application has been developed in close cooperation with local municipalities and groups of citizens. It is therefore a truly user-centered digital application, adapted to the needs of municipalities and local communities. Moreover, ImproveMyCity is offered as an easy-to-install-and-deploy integrated solution, the core part of which is freely available as open source software.

The iOS application is demonstrated in the following video

Visual Recognition Prototype for Android

This application implements, for demonstration purposes, the algorithm configuration that resulted from this experiment.

The system receives frames from the camera and, after performing the visual recognition tasks, visualizes the prediction results on the user's screen. The application requires visual recognition models that are computed offline in a previous stage using SVM classifiers and stored in the phone's local storage.
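To make the on-device prediction step more concrete, here is a minimal sketch of scoring one frame against pre-trained models. It assumes linear SVMs (one weight vector and bias per concept) and a feature vector already extracted from the frame; the source only says "SVM classifiers", so the kernel, the one-model-per-concept layout, and the function names are assumptions.

```python
def svm_score(weights, bias, features):
    """Decision value of a linear SVM: w . x + b."""
    if len(weights) != len(features):
        raise ValueError("weights and features must have the same length")
    return sum(w * x for w, x in zip(weights, features)) + bias


def predict_concepts(models, features, threshold=0.0):
    """Score a frame's feature vector against every stored model.

    `models` maps a concept name to a (weights, bias) pair, standing in for
    the models loaded from local storage (hypothetical layout).
    Returns (name, score) pairs above `threshold`, best first.
    """
    scores = {name: svm_score(w, b, features) for name, (w, b) in models.items()}
    hits = [(name, s) for name, s in scores.items() if s > threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    models = {"person": ([1.0, -1.0], 0.5), "car": ([-1.0, 1.0], -0.5)}
    print(predict_concepts(models, [2.0, 1.0]))
```

Feature extraction from the camera frame is deliberately left out, since the source does not say which descriptors the prototype uses.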


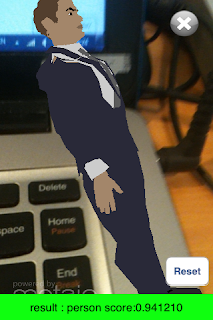

Tangible AR with visual recognition

This application uses server-side visual recognition (the query image is sent to a server, which returns the result) to recognize a concept through the phone's camera and render a relevant 3D model on the screen. The user can zoom in or out on the 3D model with a pinch gesture and rotate it.

Image: I was recognized as a person (!)

Location based AR

The following application has been built using the metaio SDK for iOS. The app generates a location-based augmented reality view with content retrieved from a server. The AR entities can be 3D models or billboards that redirect the user to a natively generated information page or to a page on the web. Other types of AR entities, such as video playback, images or animated 3D models, can also be added easily.
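The geometry behind placing a location-based entity in the camera view can be sketched independently of any SDK. The snippet below computes the compass bearing from the user to an entity and maps it to a horizontal screen position. The 60-degree field of view, the 640-pixel width, and both function names are assumptions for illustration; this is not the metaio SDK's API.

```python
import math


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial compass bearing (0 = north, 90 = east) from point 1 to point 2."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dl = math.radians(lon2 - lon1)
    y = math.sin(dl) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dl)
    return math.degrees(math.atan2(y, x)) % 360.0


def screen_x(heading_deg, target_bearing_deg, fov_deg=60.0, screen_width_px=640):
    """Horizontal pixel position of an entity, or None if outside the view.

    `heading_deg` is the direction the camera faces (from the compass).
    """
    # Signed angle from the camera heading to the target, in [-180, 180).
    offset = (target_bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None
    return (offset / fov_deg + 0.5) * screen_width_px
```

An entity straight ahead lands at the centre of the screen, and entities outside the assumed field of view are simply not drawn.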

Image: Billboard view

Image: Detail page for an AR entity

Image: Billboard view

Image: The bridge in 3D view
